In a controlled environment such as a user lab, infrared eye tracking is highly reliable and delivers the most accurate data. But as the world has shifted to remote work, demand for at-home usability tests has skyrocketed, and many businesses now rely on online solutions and services.

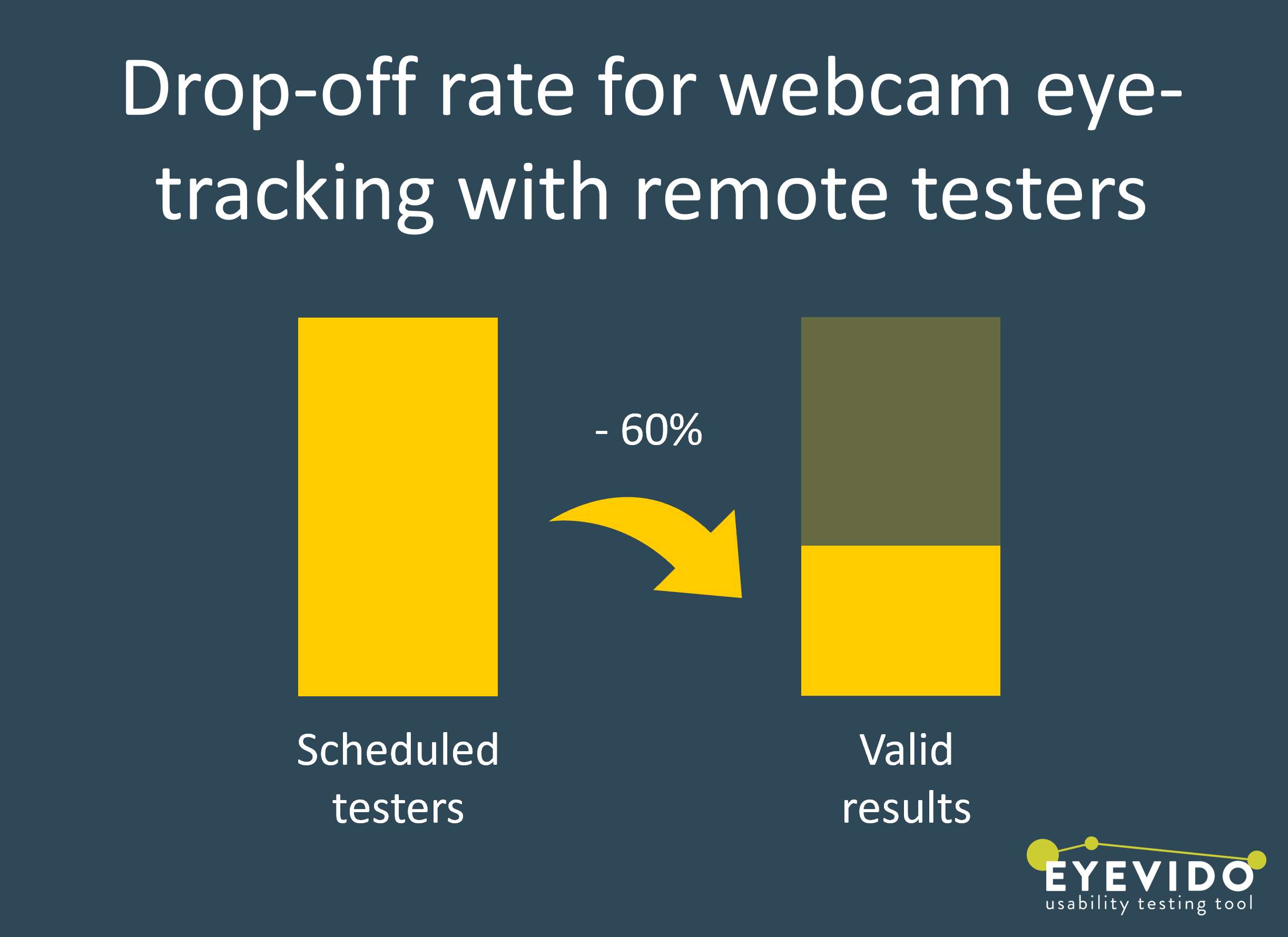

Naturally, webcam eye tracking sounds very promising, as participants no longer have to attend tests in person. However, it is more susceptible to errors than infrared eye tracking, so does every remote tester deliver high-quality webcam eye-tracking data? The answer is no. There is no way to tell beforehand which users will have difficulty with the calibration or will be taking part under unfavourable environmental conditions. Because of these external factors, it is hard to gauge how many test users must be recruited to obtain the desired number of data sets.

But that does not mean that webcam eye tracking isn’t a viable solution: if the drop-off rate is taken into account, it still delivers solid results. We published our findings on how many testers are needed here: Webcam Eye Tracking: Study Conduction and Acceptance of Remote Tests with Gaze Analysis

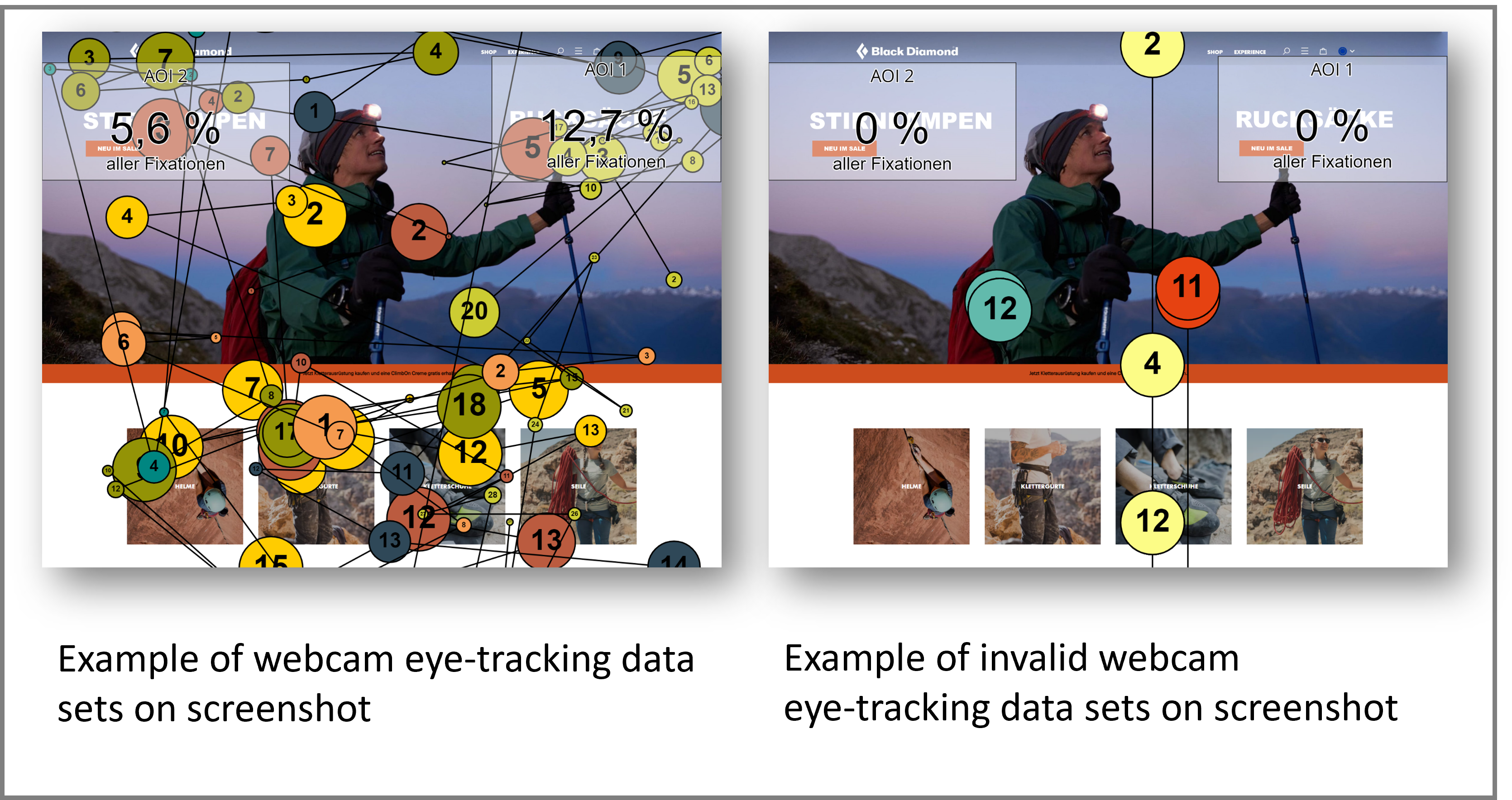

A total of 57 remote testers signed up online for a simple webcam eye-tracking study and received a participation link by email, along with helpful tips such as keeping head movements to a minimum. Clicking the link opened the EYEVIDO Lab tester interface in their browser, which then started calibrating their webcam. Their task was to look at scrollable screenshots naturally, just as if they were browsing at home.

To demonstrate that the webcam eye-tracking data was accurate rather than random, we verified that the well-known gaze-cueing effect* could be detected on the screenshots.

*When we see an arrow or another person’s gaze, our attention shifts in the same direction.

About 40 % of testers produced valid results. In our evaluation with EYEVIDO Lab, we could visualize behavioural scan patterns and correctly identify the areas that attracted the most attention using heatmaps and areas of interest (AOIs). For some testers, external conditions on their side led to invalid recordings; others could not get past the calibration. These two issues were the main causes of the drop-off rate of about 60 %.

At the end of the day, webcam eye tracking can provide accurate data, but the high drop-off rate must be taken into account and compensated for by over-recruiting by about 150 %.

We made all our insights on this topic publicly available here: Webcam Eye Tracking: Study Conduction and Acceptance of Remote Tests with Gaze Analysis